AI is a notorious liar, and Microsoft now claims it has a fix for the problem. Understandably, that is going to raise some eyebrows, and there is reason to be skeptical. Microsoft today announced Correction, a service that attempts to automatically revise AI-generated text that is factually wrong. Correction first flags text that may contain errors (for example, a summary of a company's quarterly earnings report that may contain a misquote), then fact-checks it by comparing the text against a trusted source (such as a transcript). Correction is available as part of Microsoft's Azure AI Content Safety API and can be used with any text-generating AI model, including Meta's Llama and OpenAI's GPT-4o.

A Microsoft spokesperson told TechCrunch that "Correction is powered by a new process that uses small and large language models to align outputs with grounding documents." "We hope this new feature will be helpful to designers and users of generative AI in fields like medicine, where application developers consider accuracy of responses paramount," the spokesperson said.

Google introduced a similar feature this summer in its AI development platform, Vertex AI, allowing customers to "ground" models using data from third-party providers, their own datasets, or Google Search. But experts warn that such grounding approaches don't address the root cause of hallucinations. "Trying to eliminate hallucinations from generative AI is like trying to eliminate hydrogen from water," says Oz Keyes, a doctoral candidate at the University of Washington who studies the ethical implications of new technologies. "It's a key part of how the technology works."

Text-generating models hallucinate because they don't actually "know" anything. They are statistical systems that identify patterns in a series of words and predict which words come next based on the countless examples they are trained on. It follows that a model's responses aren't answers so much as predictions of how a question would be answered if it appeared in the training set. As a result, models tend to play fast and loose with the truth. One study found that OpenAI's ChatGPT answered medical questions incorrectly half the time. Microsoft's solution is a pair of cross-referencing, copy-editor-esque meta models designed to highlight and rewrite hallucinations.

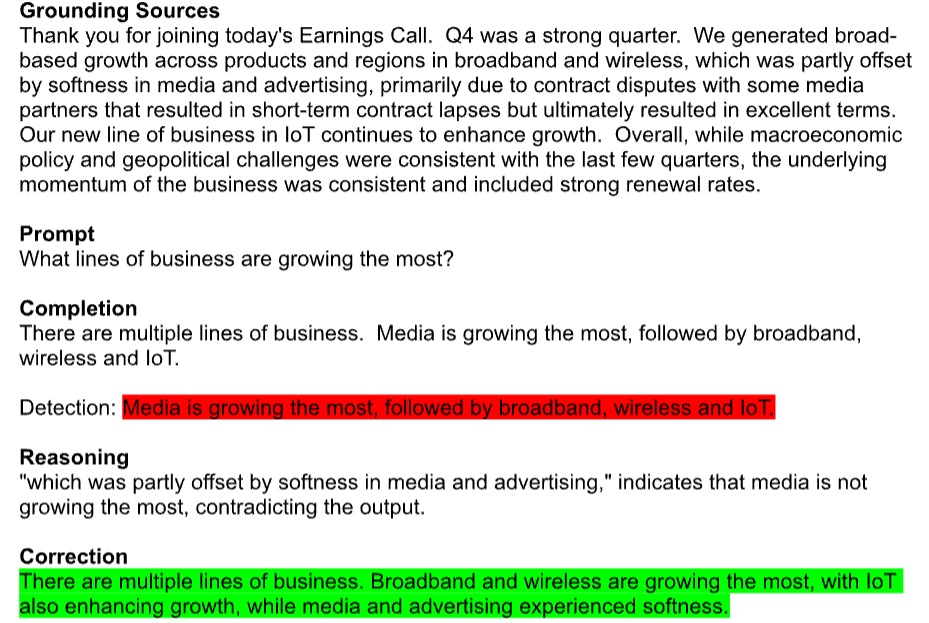

A classifier model looks for possibly incorrect, fabricated or irrelevant snippets of AI-generated text (hallucinations). If the classifier detects hallucinations, it ropes in a second model, a language model, that tries to correct them in accordance with the specified "grounding documents." "Correction significantly improves the reliability and trustworthiness of AI-generated content, helping app developers reduce user frustration and potential reputational risk," a Microsoft spokesperson said. "Groundedness detection doesn't solve the 'accuracy' problem, but it helps align generative AI outputs with grounding documents."
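The detect-then-rewrite flow described above can be sketched in a few lines. This is a toy under loud assumptions, not Microsoft's implementation or the Azure API: the "classifier" here is a simple vocabulary check, the "rewriter" just swaps in the closest sentence from the grounding document, and every function name is hypothetical.

```python
import difflib
import re


def tokens(text: str) -> set[str]:
    """Lowercased word and number tokens."""
    return set(re.findall(r"\w+", text.lower()))


def split_sentences(text: str) -> list[str]:
    """Naive sentence splitter, adequate for a toy example."""
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def is_flagged(sentence: str, grounding: str) -> bool:
    """Stage 1 (stand-in for the classifier): flag a sentence if it uses any
    word or number that never appears in the grounding document."""
    return not (tokens(sentence) <= tokens(grounding))


def rewrite(sentence: str, grounding: str) -> str:
    """Stage 2 (stand-in for the rewriting language model): replace the
    flagged sentence with the most similar grounding sentence."""
    return max(
        split_sentences(grounding),
        key=lambda c: difflib.SequenceMatcher(None, sentence.lower(), c.lower()).ratio(),
    )


def detect_and_correct(generated: str, grounding: str) -> tuple[str, list[str]]:
    """Run both stages; return the corrected text and the flagged sentences."""
    flagged, output = [], []
    for sentence in split_sentences(generated):
        if is_flagged(sentence, grounding):
            flagged.append(sentence)
            output.append(rewrite(sentence, grounding))
        else:
            output.append(sentence)
    return " ".join(output), flagged


grounding = "Revenue rose 12% in the third quarter. The CEO said demand remained strong."
generated = "Revenue rose 21% in the third quarter. The CEO said demand remained strong."
corrected, flagged = detect_and_correct(generated, grounding)
print(flagged)    # the sentence containing the unsupported figure "21"
print(corrected)  # identical to the grounding text in this example
```

Even this toy shows that the detector has blind spots of its own. A sentence such as "The CEO rose 12%." reuses only words found in the source, so the vocabulary check lets it through.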

Keyes is skeptical. "It may mitigate some issues, but it will also introduce new ones," they said. After all, Correction's hallucination-detection model is presumably capable of hallucinating, too. When asked for background on the Correction models, the spokesperson pointed to a recent paper from a Microsoft research group that described the models' prototype architecture. But the paper omits important details, such as the datasets used to train the models.

Mike Cook, a researcher at Queen Mary University who specializes in AI, argued that even if Correction works as advertised, it could exacerbate the problems of trust and explainability around AI. The service may catch some errors, but it could also lull users into a false sense of security, leading them to believe the models are truthful more often than they actually are. "Microsoft, like OpenAI and Google, has created this problematic situation where models are being relied upon in scenarios where they are frequently wrong," he said. "What Microsoft is doing now is repeating the mistake at a higher level. Say this takes us from 90% safety to 99% safety. The problem was never really in that 9%. It will always be in the 1% of mistakes that we haven't caught yet."

Cook added that there is also a cynical business angle to how Microsoft is bundling Correction. While the feature itself is free, the "groundedness detection" needed to find hallucinations for Correction to revise is free only for up to 5,000 "text records" per month. After that, it costs 38 cents per 1,000 text records. Microsoft, for its part, has yet to generate significant revenue from AI. A Wall Street analyst downgraded the company's stock this week, citing skepticism about its long-term AI strategy.
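For a sense of scale, the pricing above works out as follows. This is back-of-the-envelope arithmetic using only the two figures quoted here. It assumes usage beyond the free tier is billed proportionally per record, which Microsoft's actual billing may not do, and the function name is made up for the example.

```python
FREE_RECORDS_PER_MONTH = 5_000   # free tier quoted in the article
CENTS_PER_1000_RECORDS = 38      # price beyond the free tier


def monthly_cost_usd(records: int) -> float:
    """Estimated monthly groundedness-detection bill in US dollars.

    Assumption: records above the free tier are billed pro rata,
    not rounded up to whole blocks of 1,000.
    """
    if records < 0:
        raise ValueError("records must be non-negative")
    billable = max(0, records - FREE_RECORDS_PER_MONTH)
    return billable * CENTS_PER_1000_RECORDS / 1000 / 100


for volume in (5_000, 105_000, 1_005_000):
    print(f"{volume:>9,} records -> ${monthly_cost_usd(volume):,.2f}")
```

Under that assumption, a developer checking about a million text records a month would pay roughly $380.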

A report in The Information says that many early adopters have paused deployments of Microsoft's flagship generative AI platform, Microsoft 365 Copilot, because of performance and cost concerns. For one client using Copilot for Microsoft Teams meetings, the AI reportedly invented attendees and implied that calls were about subjects that were never actually discussed.

Accuracy, and the potential for hallucinations, are now among businesses' biggest concerns when piloting AI tools, according to a KPMG poll. "If this were a normal product lifecycle, generative AI would still be in academic research and development, being worked on to improve it and understand its strengths and weaknesses," Cook said. "Instead, we've deployed it across a dozen industries. Microsoft and others have decided to load everyone onto an exciting new rocket and assemble the landing gear and parachutes on the way to their destination."
